Blue Waters Webinars | Charm++ and Adaptive MPI

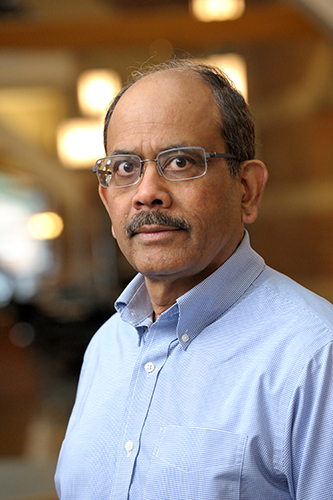

Professor Laxmikant (Sanjay) Kale is the director of the Parallel Programming Laboratory and the Paul and Cynthia Saylor Professor of Computer Science at the University of Illinois at Urbana-Champaign. Prof. Kale has worked on various aspects of parallel computing, with a focus on enhancing performance and productivity via adaptive runtime systems, and with the belief that only interdisciplinary research involving multiple CSE and other applications can bring well-honed abstractions back into Computer Science that will have a long-term impact on the state of the art. His collaborations include the widely used, Gordon Bell award-winning (SC'2002) biomolecular simulation program NAMD, as well as work on computational cosmology, quantum chemistry, rocket simulation, contagion, space-time meshes, and other unstructured mesh applications. He takes pride in his group's success in distributing and supporting software embodying his research ideas, including Charm++, Adaptive MPI, and the BigSim framework. His team won the HPC Challenge award at Supercomputing 2011 for their entry based on Charm++. Prof. Kale received a B.Tech degree in Electronics Engineering from Benares Hindu University, Varanasi, India, in 1977, and an M.E. degree in Computer Science from the Indian Institute of Science in Bangalore, India, in 1979. He received a Ph.D. in computer science from the State University of New York, Stony Brook, in 1985. He worked as a scientist at the Tata Institute of Fundamental Research from 1979 to 1981. Since 1985, he has been on the faculty of the University of Illinois at Urbana-Champaign. Sanjay is an ACM and IEEE Fellow, and a winner of the 2012 IEEE Sidney Fernbach Award.

Modern supercomputers, as well as clusters, pose several challenges for programming parallel applications effectively: exposing concurrency, optimizing data movement, controlling load imbalance, addressing heterogeneity, handling variations in application behavior, tolerating system failures, handling multi-physics applications, and supporting in-situ data analysis. Using the Charm++ parallel programming system, application developers have been able to address these challenges and run their code efficiently on large supercomputers. The foundational concepts underlying Charm++ are overdecomposition, asynchrony, migratability, and adaptivity. A Charm++ program specifies collections of interacting C++ objects, which are assigned to processors dynamically by the runtime system. Charm++ provides an asynchronous, message-driven, task-based programming model with migratable objects and an adaptive runtime system that controls execution. It automates communication overlap, load balancing, fault tolerance, checkpointing for split execution, and power management; it also supports interoperation with MPI and promotes modularity. Adaptive MPI is an MPI implementation built on Charm++ that provides the same capabilities to MPI applications. In this webinar, I will explain the foundational concepts in Charm++ and AMPI, describe specific capabilities of these systems, and illustrate them with real-world application case studies.
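To make the idea of overdecomposition with runtime-controlled placement a little more concrete, here is a toy sketch in plain Python. It does not use Charm++ or any of its APIs: the function names (`greedy_assign`, `block_assign`, `proc_totals`), the per-unit load numbers, and the greedy placement heuristic are all assumptions chosen for illustration, and an actual Charm++ load balancer may work quite differently. The point is only that when work is split into many more units than processors, a runtime that knows each unit's load has room to place units so that no processor is heavily overloaded.

```python
import heapq


def greedy_assign(loads, num_procs):
    """Place work units heaviest-first on the currently least-loaded processor.

    Illustrative heuristic only; not the Charm++ load balancing API.
    """
    heap = [(0.0, p) for p in range(num_procs)]  # (total load, processor id)
    heapq.heapify(heap)
    assignment = {}
    for unit in sorted(range(len(loads)), key=lambda u: -loads[u]):
        total, proc = heapq.heappop(heap)
        assignment[unit] = proc
        heapq.heappush(heap, (total + loads[unit], proc))
    return assignment


def block_assign(num_units, num_procs):
    """Static baseline: contiguous blocks of units per processor, ignoring load."""
    per_proc = max(1, num_units // num_procs)
    return {u: min(u // per_proc, num_procs - 1) for u in range(num_units)}


def proc_totals(loads, assignment, num_procs):
    """Sum the load that each processor ends up with under an assignment."""
    totals = [0.0] * num_procs
    for unit, proc in assignment.items():
        totals[proc] += loads[unit]
    return totals


if __name__ == "__main__":
    num_procs = 4
    # 16 work units on 4 processors (hypothetical loads, deliberately uneven).
    loads = [8, 7, 6, 5] + [1] * 12
    static = proc_totals(loads, block_assign(len(loads), num_procs), num_procs)
    balanced = proc_totals(loads, greedy_assign(loads, num_procs), num_procs)
    print("static block placement:", static)
    print("load-aware placement:  ", balanced)
```

With these made-up loads, the static block placement puts all four heavy units on one processor, while the load-aware placement spreads them out. In Charm++ the analogous decisions are made by the adaptive runtime system, which can also migrate objects as measured loads change during execution.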

When: 10:00 CST, May 30, 2018

Length: One-hour presentation

Target Audience: Software developers looking to develop machine-independent parallel code

Prerequisites: Parallel programming experience

Training and Reference Materials: http://charm.cs.illinois.edu/research/charm

Webinar

YouTube: https://youtu.be/GS3m5bEkCL4
