IO Libraries

NetCDF

NetCDF (Network Common Data Form) is supported and distributed as part of the Cray XE6 Programming Environment. It is a set of software libraries and machine-independent data formats that support the creation, access, and sharing of array-oriented scientific data.

Because of the way modules interact with the Cray compiler wrappers, the steps for using NetCDF on Blue Waters differ somewhat from those in the documentation provided by the NetCDF group. The "netcdf" and "parallel-netcdf" modules recommended in the standard NetCDF documentation will not work on Blue Waters. Instead, load the appropriate Cray module (see below) and build your application with the standard Cray "ftn", "cc", and/or "CC" compiler wrappers. The Cray compiler wrappers automatically locate the NetCDF include files and link in the appropriate NetCDF libraries.

How to use NetCDF

% module load cray-netcdf
% <--compile code with "ftn", "cc", and/or "CC"-->

p-NetCDF (Parallel NetCDF) is a library providing high-performance I/O while still maintaining file-format compatibility with Unidata's NetCDF. There is no conflict between the serial and parallel versions of the NetCDF modules on Blue Waters.

How to use p-NetCDF

% module load cray-parallel-netcdf
% <--compile code with "ftn", "cc", and/or "CC"-->
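As a rough sketch of the NetCDF build step, the helper below maps a source file to the Cray wrapper that would compile it. The function name `cray_wrapper_for` and the file name `writer.c` are hypothetical; only the module name and the three wrappers come from the text above.

```shell
# Pick the Cray compiler wrapper for a source file based on its extension.
# cray_wrapper_for is a hypothetical helper, not part of the Cray tools.
cray_wrapper_for() {
    case "$1" in
        *.f|*.F|*.f90|*.F90)  echo ftn ;;   # Fortran
        *.c)                  echo cc  ;;   # C
        *.C|*.cc|*.cpp|*.cxx) echo CC  ;;   # C++
        *) echo "unknown source type: $1" >&2; return 1 ;;
    esac
}

# On Blue Waters the build would then look like this (left as comments so
# the sketch can be sourced on any machine):
#   module load cray-netcdf
#   $(cray_wrapper_for writer.c) -o writer writer.c
# No -I, -L, or -l flags for NetCDF are passed: the wrapper adds them.
```

The same pattern applies to p-NetCDF after loading `cray-parallel-netcdf` instead.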

HDF5

HDF5 (Hierarchical Data Format version 5) is supported and distributed as part of the Cray XE6 Programming Environment. It is a data model, library, and file format for storing and managing scientific data. Parallel HDF5 is an API that supports parallel file access for HDF5 files in a message-passing environment. You can use either the serial HDF5 API or the parallel HDF5 API, but not both.

Because of the way modules interact with the Cray compiler wrappers, the steps for using HDF5 on Blue Waters differ somewhat from those in the documentation provided by the HDF5 group. The "hdf5" and "hdf5-parallel" modules recommended in the standard HDF5 documentation will not work on Blue Waters. Also, do not use the compiler wrappers supplied with the HDF5 distribution, such as "h5cc"; they will not work properly on Blue Waters. Instead, load the appropriate Cray module (see below) and build your application with the standard Cray "ftn", "cc", and/or "CC" compiler wrappers. The Cray compiler wrappers automatically locate the HDF5 include files and link in the appropriate HDF5 libraries.

How to use HDF5 (modules are mutually exclusive)

Load one of the two modules, not both:

% module load cray-hdf5
or
% module load cray-hdf5-parallel

% <--compile code with "ftn", "cc", and/or "CC"-->

NetCDF and HDF5

NetCDF and HDF5 can be used simultaneously on Blue Waters. Because of the way modules interact with the Cray compiler wrappers, the steps for using NetCDF and HDF5 together on Blue Waters differ somewhat from those in their respective documentation. Once the appropriate module is loaded (see below), use the standard Cray "ftn", "cc", and/or "CC" compiler wrappers to build your application. The Cray compiler wrappers automatically locate the appropriate include files and link in the appropriate libraries.
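Because the serial and parallel HDF5 modules described above are mutually exclusive, it can be worth checking which one is loaded before building. The sketch below is one possible check, assuming that Environment Modules records loaded modules in the colon-separated `LOADEDMODULES` variable. The function name `hdf5_flavor` and the version number in the usage note are hypothetical.

```shell
# Report which HDF5 flavor appears in a colon-separated module list.
# With no argument, the list is taken from $LOADEDMODULES.
# hdf5_flavor is a hypothetical helper; the body runs in a subshell so the
# IFS and globbing changes do not leak into the caller.
hdf5_flavor() (
    list="${1-${LOADEDMODULES-}}"
    serial=0
    parallel=0
    set -f      # no filename expansion while splitting the list
    IFS=:
    for m in $list; do
        case "$m" in
            cray-hdf5-parallel|cray-hdf5-parallel/*) parallel=1 ;;
            cray-hdf5|cray-hdf5/*)                   serial=1 ;;
        esac
    done
    if [ "$serial" -eq 1 ] && [ "$parallel" -eq 1 ]; then
        echo "conflict"   # both flavors loaded: unload one before building
        exit 1
    elif [ "$parallel" -eq 1 ]; then
        echo "parallel"
    elif [ "$serial" -eq 1 ]; then
        echo "serial"
    else
        echo "none"
    fi
)
```

Called with no argument, `hdf5_flavor` inspects the current environment. Passing a list explicitly, for example `hdf5_flavor 'cray-hdf5/1.8.16'`, prints `serial` and makes the function easy to try on any machine.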
How to use NetCDF and HDF5

% module load cray-netcdf-hdf5parallel
% <--compile code with "ftn", "cc", and/or "CC"-->

IOBUF

IOBUF is a Cray library that improves the I/O performance of programs that perform large sequential POSIX I/O. From the man page:

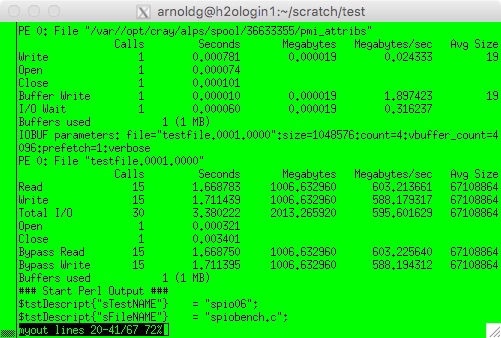

How to use IOBUF

Sample output after running with iobuf and the IOBUF_PARAMS above:

MPI-IO

MPI-IO is available via the Cray MPICH implementation. The MPI-IO layer of the software stack provides a mechanism for optimizing I/O on the user's behalf. It is based on ROMIO, an implementation of the I/O part of MPI. For tuning and optimization tips, see the "Optimizing MPI-IO on Cray systems" document.
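As a hedged illustration of the kind of tuning that document covers, Cray MPICH can take MPI-IO hints from environment variables set before the job is launched. The sketch below assumes the `MPICH_MPIIO_HINTS` and `MPICH_MPIIO_HINTS_DISPLAY` variables described in Cray's intro_mpi man page. The file pattern and hint value are examples only and should be checked against that man page before use.

```shell
# Ask Cray MPICH to print the MPI-IO hints in effect when a file is opened.
export MPICH_MPIIO_HINTS_DISPLAY=1

# Apply a ROMIO hint to every file the job opens ("*" matches any path).
# romio_cb_write=enable requests collective buffering for writes; whether it
# helps depends on the access pattern, so treat this value as an example.
export MPICH_MPIIO_HINTS="*:romio_cb_write=enable"

# Launch the application as usual afterwards; no rebuild is needed because
# the variables are read when the program runs.
```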
