|

|

|

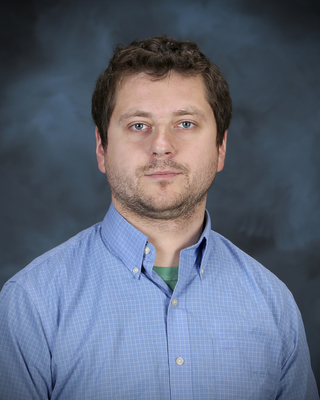

Joe Allen |

|

Joe Allen joined the TACC Life Sciences Computing Group in 2015, where he works

to advance computational biology and bioinformatics research at UT system

academic and health institutions. His research experience spans a range of

disciplines from computer-aided drug design to wet lab biochemistry. He is also

interested in exploring new ways high performance computing resources can be

used to answer challenging biological questions. Before joining TACC, Joe

earned a B.S. in Chemistry from the University of Jamestown (2006), and a Ph.D.

in Biochemistry from Virginia Tech (2011).

|

|

|

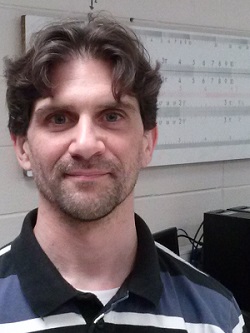

Galen Arnold |

|

Galen Arnold is experienced with high-performance computing (C, C++, Fortran)

and performance analysis/tuning from both application and system perspectives.

As a system engineer with NCSA, Galen enjoys helping people get the most out of

HPC systems such as Blue Waters and XSEDE systems with accelerators. He's part

of the Blue Waters applications support team as well as the XSEDE software

development and integration testing group. He has a good working knowledge of

most things unix/linux/hpc/networking and has a knack for debugging code. He

believes K&R-C is the one true language but reluctantly admits the superior

numerical performance of Fortran codes.

|

|

|

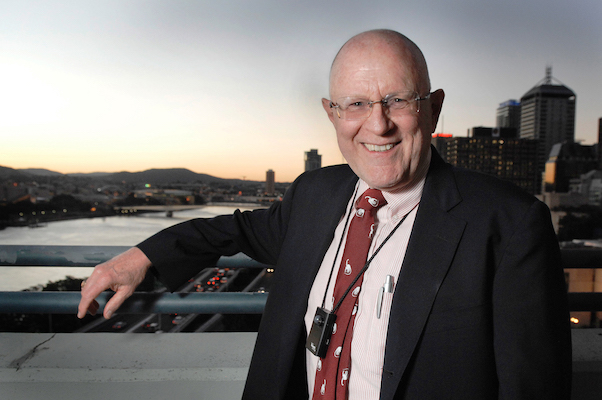

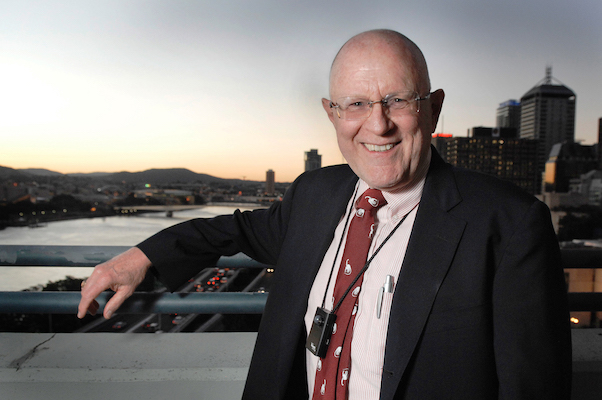

Gordon Bell |

|

Gordon Bell is a Microsoft Researcher (ret). He spent 23 years at Digital

Equipment Corporation as Vice President of R&D, responsible for the first

mini- and time-sharing computers and DEC's VAX, with a 6-year sabbatical at

Carnegie Mellon. In 1987, as the first NSF Assistant Director for Computing

(CISE), he led the National Research and Education Network panel that became

the Internet. Leaving NSF, he established the Gordon Bell Prize to acknowledge

outstanding efforts in computing. Bell maintains three interests: computing,

startup companies, and lifelogging. He is a member or Fellow of the ACM,

AMACAD, IEEE, FTSE (Australia), NAE, and NAS. He received the 1991 National

Medal of Technology. He is a founding trustee of the Computer History Museum,

Mountain View, CA, and lives in San Francisco.

http://gordonbell.azurewebsites.net/

|

|

|

Philip Carns |

|

Philip Carns is a principal software development specialist in the Mathematics

and Computer Science division of Argonne National Laboratory. He is also an

adjunct associate professor of electrical and computer engineering at Clemson

University and a fellow of the Northwestern-Argonne Institute of Science and

Engineering. He received his Ph.D. in computer engineering from Clemson

University in 2005. His research interests include characterization, modeling,

and development of storage systems for data-intensive scientific computing.

|

|

|

Tim Cockerill |

|

Tim Cockerill is TACC's Director of User Services. He oversees the allocations

process by which computing time and storage are awarded on TACC's HPC systems.

The User Services team is also responsible for user account management,

training, and user guides. Tim also currently serves as the DesignSafe Deputy

Project Director, and is involved in TACC's cloud computing projects Chameleon

and Jetstream. Tim joined TACC in January, 2014, as the Director of Center

Programs responsible for program and project management across the Center's

portfolio of awards. Prior to joining TACC, he was the Associate Project

Director for XSEDE and the TeraGrid Project Manager. Before entering the world

of high performance computing in 2003, Tim spent 10 years working in startup

companies aligned with his research interests in gallium arsenide materials and

semiconductor lasers. Prior to that, Tim earned his B.S., M.S., and Ph.D.

degrees from the University of Illinois at Urbana-Champaign and was a Visiting

Assistant Professor in the Electrical and Computer Engineering Department.

|

|

|

Giuseppe Congiu |

|

Giuseppe Congiu is a postdoctoral appointee at Argonne National Laboratory and

staff member of the Programming Models and Runtime Systems (PMRS) group led by

Dr. Pavan Balaji. He has a bachelor's degree in electrical and electronic

engineering from the University of Cagliari (Italy) and a Ph.D. in computer

science from Johannes Gutenberg University Mainz (Germany). During his Ph.D. he

mainly worked on parallel I/O and guided I/O for HPC storage systems. Currently

he is working on heterogeneous computing systems, and he also contributes to

the MPICH project.

|

|

|

Niall Gaffney |

|

Niall Gaffney's background largely revolves around the management and

utilization of large inhomogeneous scientific datasets. Niall, who earned his

B.A., M.A., and Ph.D. degrees in astronomy from The University of Texas at

Austin, joined TACC in May 2013. Prior to that he worked for 13 years in the

role of designer and developer for the archives housed at the Space Telescope

Science Institute (STScI), which hold the data from the Hubble Space Telescope,

Kepler, and James Webb Space Telescope missions. He was also a leader in the

development of the Hubble Legacy Archive, a project that harvested the 20+ years

of Hubble Space Telescope data to create some of the most sensitive

astronomical data products available for open research. Prior to his work at

STScI, Niall worked as "the friend of the telescope" for the Hobby-Eberly

Telescope (HET) project at the McDonald Observatory in west Texas where he

started working to create systems to acquire and handle the storage and

distribution of the data the HET produced.

|

|

|

Yanfei Guo |

|

Yanfei Guo received his Ph.D. in computer science from the University of

Colorado at Colorado Springs in 2015. He won a Best Paper award at the

USENIX/ACM International Conference on Autonomic Computing in 2013.

|

|

|

Kevin Harms |

|

Kevin Harms is the I/O Libraries & Benchmarks Lead at the Argonne Leadership Computing Facility at Argonne National Laboratory.

|

|

|

Helen He |

|

Helen is a High Performance Computing Consultant at NERSC, Lawrence Berkeley

National Laboratory. She serves as the main user focus point of contact, among

users, systems and vendors staff, for the NERSC flagship systems including the

Cray systems: XT4 (Franklin), XE6 (Hopper), and XC40 (Cori), deployed over the

past 10 years, to ensure they are efficient for scientific productivity. She

specializes in the software programming environment, parallel programming

models such as MPI and OpenMP, applications porting and benchmarking, and

climate models. Helen also serves on the OpenMP Language Committee to represent

Berkeley Lab and has presented OpenMP tutorials at various venues including SC,

XSEDE, and ECP. Helen has been on the Organizing Committee for many important

HPC conference series, such as Cray User Group (Program Chair for 2017, 2018),

SC, HPCS, IXPUG, and OpenMPCon. Helen has a Ph.D. in Marine Studies and an M.S.

in Computer Information Science.

|

|

|

Oscar Hernandez |

|

Oscar Hernandez received a Ph.D. in Computer Science from the University of

Houston. He is a staff member of the Computer Science Research (CSR) Group,

which supports the Programming Environment and Tools for the Oak Ridge

Leadership Computing Facility (OLCF). He has experience in working on many

de facto standards such as OpenMP, OpenACC, OpenSHMEM, and UCX and

benchmarking efforts like SPEC/HPG. At ORNL he works closely with application

teams including the CAAR and INCITE efforts and constantly interacts with them

to address their programming model and tools needs via HPC software ecosystems.

He is currently working on the programming environment for Summit and works

very closely with the vendors on their plans for next-generation programming

models. He has worked on many projects funded by DOE, DoD, NSF, and industrial

partners in the oil and gas industry.

|

|

|

Michael Heroux |

|

Mike Heroux is a Senior Scientist at Sandia National Laboratories, Director of

SW Technologies for the US DOE Exascale Computing Project (ECP) and Scientist

in Residence at St. John's University, MN. His research interests include all

aspects of scalable scientific and engineering software for new and emerging

parallel computing architectures, and improving scientific software developer

productivity and software sustainability. He leads the IDEAS project, dedicated

to engaging scientific software teams to identify and adopt practices that

improve productivity and sustainability.

|

|

|

Bill Kramer |

|

As the Principal Investigator and Director of the Blue Waters Project, Bill is

responsible for all aspects of development, deployment and operation of the NSF

Blue Waters system, National Petascale Computing Facility and the associated

research and development activities through the Great Lakes Consortium, NCSA

and University of Illinois supporting projects. This involves over 100 staff

for integration and testing efforts for the Blue Waters systems and transition

to full operations. Blue Waters is the 20th supercomputer Bill has deployed or managed, six of

which were top 10 systems. Bill held similar roles at NERSC and NASA.

|

|

|

Robert Latham |

|

Robert Latham, as a Principal Software Development Specialist at Argonne

National Laboratory, strives to make scientific applications use I/O more

efficiently. After earning his BS (1999) and MS (2000) in Computer Engineering

at Lehigh University (Bethlehem, PA), he worked at Paralogic, Inc., a Linux

cluster start-up. His work with cluster software including MPI implementations

and parallel file systems eventually led him to Argonne, where he has spent the

last 17 years. His research focus has been on high performance I/O for

scientific applications and I/O metrics. He has worked on the ROMIO MPI-IO

implementation, the parallel file systems PVFS (v1 and v2), Parallel NetCDF,

and Mochi I/O services.

|

|

|

Scott Lathrop |

|

Through his position with the Shodor Education Foundation, Inc., Scott Lathrop

is the Blue Waters Technical Program Manager for Education. Lathrop has been

involved in high performance computing and communications activities since

1986, with a focus on HPC education and training for more than 20 years.

Lathrop coordinates the education, outreach and training activities for the

Blue Waters project. He helps ensure that Blue Waters education, outreach and

training activities are meeting the needs of the community. Lathrop has been

involved in the SC Conference series since 1989, served as a member of the SC

Steering Committee for six years, and served as the Conference Chair for the

SC11 and XSEDE14 Conferences. He formed and led the International HPC Training

Consortium for three years, after which it merged within the ACM SIGHPC

Education Chapter during the SC17 Conference. He is an ex-officio officer of

the ACM SIGHPC Education Chapter. Lathrop has been active in the planning and

participation in HPC education and training workshops at numerous conferences

including the SC and ISC Conferences.

|

|

|

Dmitry Liakh |

|

Dmitry Liakh (Lyakh) is a computational scientist at the scientific computing

group at the Oak Ridge Leadership Computing Facility (OLCF) whose main focus is

development and implementation of efficient methods and parallel algorithms for

quantum many-body theory on large-scale heterogeneous HPC platforms, such as the

NVIDIA GPU-accelerated Titan and Summit supercomputers at OLCF. More

specifically, Dmitry has developed GPU accelerated libraries for performing

numerical tensor algebra computations on heterogeneous HPC platforms equipped

with multicore CPU and NVIDIA GPU processors. These accelerated libraries serve

the role of computational engines in software packages targeting quantum

chemistry simulations, quantum computing, and generic multivariate data

analytics workloads. Dmitry has been using the CUDA programming framework for

accelerated computing since 2008. Occasionally he also delivers CUDA

programming training for scientific computing.

|

|

|

Tom Maiden |

|

Tom has worked for over 25 years in the area of High Performance Computing.

Engaged with the user community from day one as a helpdesk consultant, Tom's

focus is and has always been on the end user experience. While user engagement

has remained his focus, HPC training has become his second passion. As part of

the XSEDE Training group, he is the logistical lead and co-developer of the

extremely popular XSEDE HPC Monthly Workshop Series. This series has

successfully delivered a variety of topics to thousands of students across

hundreds of sites using the Wide Area Classroom technology. With one eye to the

future and the other focused on current issues, he is constantly looking to

improve the educational experience for the HPC community.

|

|

|

David Martin |

|

David Martin is Manager, Industry Partnerships and Outreach at the Argonne Leadership Computing Facility at Argonne National Laboratory, where he works with industrial users to harness high performance computing and take advantage of the transformational capabilities of modeling and simulation. David brings broad industry and research experience to ALCF. Prior to joining ALCF, David led IBM's integration of internet standards, grid and cloud computing into offerings from IBM's Systems and Technology Group. Before IBM, David managed networks and built network services for the worldwide high-energy physics community at Fermilab. David began his career at AT&T Bell Laboratories, doing paradigm-changing work in software engineering and high-speed networking. David has a BS from Purdue and an MS from the University of Illinois at Urbana-Champaign, both in Computer Science.

|

|

|

Marta Garcia Martinez |

|

Dr. Eng. Marta Garcia Martinez is a Computational Scientist in the Computational

Science Division (CPS) at Argonne National Laboratory and has been the Program Director

of the Argonne Training Program on Extreme-Scale Computing (ATPESC) since 2015.

Born in Calahorra (Spain), she moved to Zaragoza to study Mechanical Engineering

and had the opportunity to carry out her final project with a Socrates/Erasmus

Grant in the Aerospace and Mechanical Engineering Dept. of the University of

Rome La Sapienza (Italy). She obtained the Degree in Mechanical Engineering,

Centro Politecnico Superior, Zaragoza (Spain), in 2001. She worked initially at

the Instituto Tecnologico de Aragon, Zaragoza (Spain) as an intern making

acoustic measurements and other viability studies. Then, she was project

manager at INGEMETAL for collaboration between American and Spanish workers for

the construction of the Burke Soleil Cover of the Milwaukee Art Museum

addition. After that project she moved to Toulouse (France) where she worked

for 3 years as Study Engineer at CERFACS, the research laboratory where she carried

out her Ph.D. in Fluid Dynamics delivered by the University of Toulouse, INPT,

Toulouse (France) in 2009. Her research activities have been mainly focused on

computational fluid dynamics simulations and high-performance computing. After

receiving her Ph.D. she worked as a postdoctoral fellow in this same laboratory

before joining Argonne National Laboratory in 2010.

|

|

|

Matthew Norman |

|

Matthew Norman is a computational climate scientist in the Scientific Computing Group

of Oak Ridge National Laboratory. He obtained two B.S. degrees, one in Computer

Science and another in Meteorology from North Carolina State University (NCSU).

After obtaining an M.S. degree in Atmospheric Science, also from NCSU, he

received the DOE Computational Science Graduate Fellowship (CSGF), and his

doctoral research was performed under Dr. Fredrick Semazzi at NCSU. His

background is in scalable numerical algorithms to efficiently integrate the

PDEs governing atmospheric fluid flow. He also has expertise in the use of

Graphics Processing Units (GPUs) for climate model codes.

|

|

|

Nick Nystrom |

|

Nick Nystrom is Chief Scientist at the Pittsburgh Supercomputing Center (PSC),

which is a joint effort of Carnegie Mellon University (CMU) and the University

of Pittsburgh, and Visiting Research Physicist at CMU. Nick is architect and PI

for Bridges, PSC's flagship system that successfully pioneered the convergence

of HPC, AI, and Big Data, and for Bridges-2, to be built in 2020. He is also PI

for the NIH Human Biomolecular Atlas Program (HuBMAP) Infrastructure and

Engagement Component and co-PI for projects that bring emerging AI technologies

to research (Open Compass), apply machine learning to biomedical data for

breast and lung cancer (Big Data for Better Health), and identify causal

relationships in biomedical big data (the Center for Causal Discovery, an NIH

Big Data to Knowledge Center of Excellence). His current research interests

include hardware and software architecture, applications of machine learning to

research data (particularly in the life sciences) and to enhance simulation,

frameworks for improving the sharing and accessibility of data, and graph

analytics.

|

|

|

Marcelo Ponce |

|

Marcelo Ponce is a computational scientist working at SciNet, the supercomputer

center at the University of Toronto, Canada. Marcelo obtained his PhD in

Astrophysics from the Rochester Institute of Technology, as a member of the

Center for Computational Relativity and Gravitation. Marcelo is one of the core

members of SciNet's education and training team. His interests include

computational astrophysics, scientific computing and visualization.

|

|

|

Ken Raffenetti |

Ken Raffenetti received his B.S. in computer science from the University of

Illinois at Urbana-Champaign in 2006. He then joined Argonne as a systems

administrator, where he has run the critical computational components in MCS:

web services, virtualization of servers, our desktop Linux infrastructure, and

our configuration management system.

In August 2013, Ken transferred from systems administration to software

development in MCS. He has been actively assisting in the development, testing,

and maintenance of MPICH.

|

|

|

Bill Renaud |

Bill is a User Support Specialist within the User Assistance and Outreach

Group. He graduated from Mississippi State University in 2000 with a degree in

Computer Science. Prior to coming to ORNL in 2005, he worked at the ERDC MSRC

in Vicksburg, MS. Altogether, he has over 17 years of experience with the most

powerful computers.

In addition to his user support role, Bill is active in center outreach

providing briefings/tours to OLCF visitors. Additionally, he was part of the

team that designed the Science Trailer for the Computing and Computational

Sciences Directorate, and often attends events at which the trailer is present

to brief visitors on the OLCF.

|

|

|

John E. Stone |

Mr. Stone is the lead developer of VMD, a high performance tool for

preparation, analysis, and visualization of biomolecular simulations used by

over 100,000 researchers all over the world. Mr. Stone's research interests

include molecular visualization, GPU computing, parallel computing, ray

tracing, haptics, virtual environments, and immersive visualization. Mr. Stone

is a frequent presenter and mentor in HPC and exascale computing training

workshops and related "Hackathon" events that aim to give computational

scientists both the knowledge of theory and the practical experience they need

to successfully develop high performance scientific software for

next-generation parallel computing systems. Mr. Stone was inducted as an NVIDIA

CUDA Fellow in 2010.

In 2017, 2018, and 2019 Mr. Stone was named an IBM Champion for Power, for

innovative thought leadership in the technical community. He also provides

consulting services for projects involving computer graphics, GPU computing,

and high performance computing.

|

|

|

Virginia Trueheart |

|

Virginia Trueheart is part of the HPC Applications Group at the Texas Advanced

Computing Center. She works directly with users troubleshooting issues with

jobs and helping them improve their use of TACC machines. She also works to

develop new training materials for users and helps coordinate responses to

users when site-wide events occur. Finally, she works directly with HPC

researchers to provide internal support around software maintenance and issue

tracking. Virginia has a Master's in Information Studies from the University of

Texas at Austin.

|

|

|

Ramses van Zon |

|

Ramses van Zon is a high-performance computing applications analyst at the

SciNet HPC Consortium at the University of Toronto in Canada. He obtained his

Ph.D. in Physics at Utrecht University, and has worked on non-equilibrium

statistical physics at the Rockefeller University in New York, and on glassy

systems and molecular dynamics simulations in the Chemical Physics Theory Group

of the University of Toronto. He has extensive experience with distributed and

shared memory computing, advanced algorithms for molecular dynamics,

bioinformatics computations and workflows. He coordinates and takes part in the

SciNet training and education program, and provides support to users on code

optimization, application porting, workflows, improving efficiency, and

parallel programming.

|

|

|

Woo-Sun Yang |

|

Woo-Sun Yang has been an HPC consultant at NERSC (National Energy Research

Scientific Computing Center) since 2008, where he provides consulting support

to NERSC users on various issues with parallel and numerical computing. He

regularly gives training to users on using various parallel debugging and

performance profiling tools on supercomputers. He received a Ph.D. degree in

Geology from the University of Illinois at Urbana-Champaign, where he studied

thermal convection in a planet's mantle with a parallel finite element numerical

model.

|

|