Through December 19, 2019:



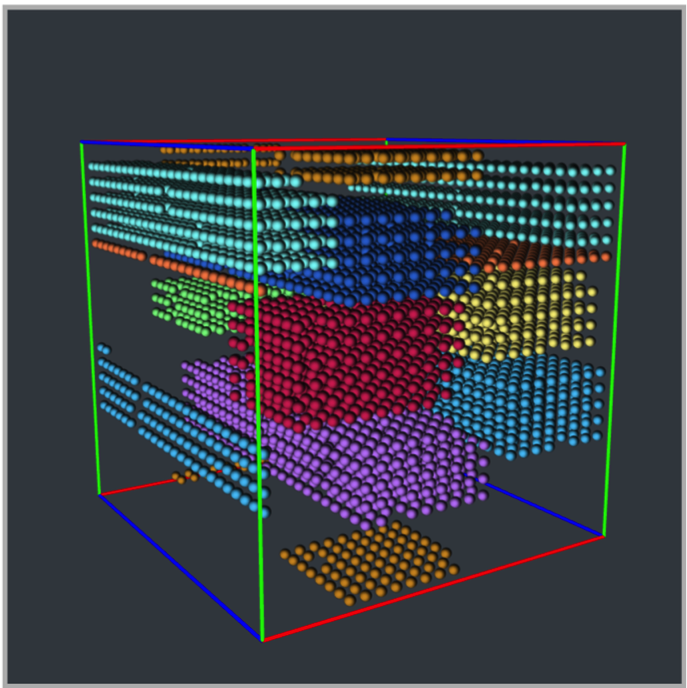

Submitting jobs

qsub

PBS header changes

This is a 1024-node XE job. Its shape is 11x2x24 and its start location is 3.6.0. The shape contains 28 service nodes (2.65%) and 3 down nodes; these counts are available via checkjob.
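The percentage quoted for the service nodes can be reproduced with a little arithmetic. The sketch below assumes that each position in a shape holds two nodes; that factor is inferred from the numbers on this page (28 out of 2 x 11 x 2 x 24 = 1056 gives 2.65%) rather than stated by it, and the function names are hypothetical.

```python
from math import prod

# Assumption: each torus position in a shape hosts two nodes. This is
# inferred from the figures quoted in this section, not stated by it.
NODES_PER_POSITION = 2


def shape_node_slots(shape: str) -> int:
    """Return the number of node slots enclosed by a shape such as '11x2x24'."""
    dims = [int(d) for d in shape.split("x")]
    return prod(dims) * NODES_PER_POSITION


def percent_of_shape(count: int, shape: str) -> float:
    """Return count as a percentage of the shape's node slots, to 2 decimals."""
    return round(100.0 * count / shape_node_slots(shape), 2)


slots = shape_node_slots("11x2x24")
print(slots)                            # 1056
print(percent_of_shape(28, "11x2x24"))  # 2.65
```

Under this assumption the shape encloses somewhat more slots than the 1024 nodes requested, which is consistent with part of the shape being occupied by service and down nodes.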

A second example has shape 8x6x9, starting at 16.10.8, with 20 service nodes and 1 down node.

Host list syntax:

    qsub -l hostlist=host+host+...
    qsub -l hostlist=host%tasks+host%tasks+

Example (hosts 12, 13, 14, 15):

    qsub -l hostlist=12%32+13%32+14%32+15%32

Warning about using host lists with the Topology Aware Scheduler: If a hostlist is specified without an explicit geometry, the scheduler treats the job as if the bounding box of the specified nodes had been requested as the geometry. If a sparse list is provided, the bounding box will likely contain more idle nodes than the threshold allows, and the job state will be set to "batchhold".
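When the host list is long, it may be easier to generate the host%tasks string than to type it. The following is a minimal sketch that reproduces the example above; `build_hostlist` is a hypothetical helper, not part of qsub or the scheduler.

```python
from typing import Iterable


def build_hostlist(hosts: Iterable[int], tasks_per_host: int) -> str:
    """Join hosts into the host%tasks+host%tasks form used by -l hostlist=."""
    return "+".join(f"{host}%{tasks_per_host}" for host in hosts)


hostlist = build_hostlist([12, 13, 14, 15], 32)
print(f"qsub -l hostlist={hostlist}")
# qsub -l hostlist=12%32+13%32+14%32+15%32
```

Passing a contiguous set of hosts keeps the bounding box tight, which matters because of the warning about sparse lists.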

Querying Job States

qstat - no change

showq - no change

checkjob - TAS aware

checkjob <jobid> now has topology-related information. The following is an example of a 40-node XK job that got a reservation.

    Total Requested Tasks: 40
    Req[0] TaskCount: 40 Partition: ALL
    Opsys: --- Arch: --- Features: xk
    Reserved Nodes: (14:35:27 -> 1:14:35:27 Duration: 1:00:00:00)
    [9402:1][9403:1][9400:1][9401:1][9398:1][9399:1]
    [9396:1][9397:1][9394:1][9395:1][9028:1][9029:1]
    [9030:1][9031:1][9032:1][9033:1][9034:1][9035:1]
    [9036:1][9037:1][7098:1][7099:1][7096:1][7097:1]
    [7094:1][7095:1][7092:1][7093:1][7090:1][7091:1]
    [6724:1][6725:1][6726:1][6727:1][6728:1][6729:1]
    [6730:1][6731:1][6732:1][6733:1]
    QOS Flags: ALLOWCOMMFLAGS,ALLOWGEOMETRY,ALLOWWRAP
    Candidate Shapes: 4x1x5 5x1x4
    Placement: 4x1x5@16.3.2
    Internal Frag: 0/40 (0.00%)
    Down Nodes: 0
    Service Nodes: 0 (0.00%)
    Ideal App Cost: 2.28
    Actual App Cost: 2.28
    Total Cost: 4.95
    Dateline Zones: Job crosses zone boundaries: Z+ Z-

showres - no change (NCSA to wrap)

Backfill

showbf

showbf reports the largest generated shape that fits each backfill window. showbf -G can be used to limit the display of backfill windows to those that can accommodate the given geometry. For example, showbf -G 3x3x3 shows information about each backfill window that can accommodate a job in a 3x3x3 shape.

    showbf -p nid11293

    Partition Tasks  Nodes Duration     StartOffset  StartDate      Geometry
    --------- ------ ----- ------------ ------------ -------------- --------
    nid11293  3584   224   23:44:33     00:00:00     22:50:05_10/29 8x2x7
    nid11293  1920   120   1:15:44:33   00:00:00     22:50:05_10/29 5x2x6
    nid11293  1600   100   INFINITY     00:00:00     22:50:05_10/29 5x2x5

    showbf -n 200

    Partition Tasks  Nodes Duration     StartOffset  StartDate      Geometry
    --------- ------ ----- ------------ ------------ -------------- --------
    ALL       3584   224   23:57:59     00:00:00     22:50:41_10/29 8x2x7
    nid11293  3584   224   23:57:59     00:00:00     22:50:41_10/29 8x2x7
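The showbf output above is whitespace-separated, so it can be read into records for use in scripts. The following is a rough sketch under that assumption; `BackfillWindow` and `parse_showbf_rows` are hypothetical names, and the sample rows are copied from the `showbf -p nid11293` output on this page.

```python
from typing import List, NamedTuple


class BackfillWindow(NamedTuple):
    partition: str
    tasks: int
    nodes: int
    duration: str
    start_offset: str
    start_date: str
    geometry: str


def parse_showbf_rows(text: str) -> List[BackfillWindow]:
    """Parse whitespace-separated showbf data rows, skipping header lines."""
    windows = []
    for line in text.splitlines():
        fields = line.split()
        # Data rows have seven fields with integers in columns two and three;
        # the column-name and dashed separator lines fail this check.
        if len(fields) != 7 or not (fields[1].isdigit() and fields[2].isdigit()):
            continue
        windows.append(
            BackfillWindow(fields[0], int(fields[1]), int(fields[2]), *fields[3:])
        )
    return windows


SAMPLE = """\
Partition Tasks Nodes Duration StartOffset StartDate Geometry
--------- ------ ----- ------------ ------------ -------------- --------
nid11293 3584 224 23:44:33 00:00:00 22:50:05_10/29 8x2x7
nid11293 1920 120 1:15:44:33 00:00:00 22:50:05_10/29 5x2x6
nid11293 1600 100 INFINITY 00:00:00 22:50:05_10/29 5x2x5
"""

windows = parse_showbf_rows(SAMPLE)
for w in windows:
    # Print the geometry, node count, and tasks per node for each window.
    print(w.geometry, w.nodes, w.tasks // w.nodes)
```

The Duration column is kept as a string because it mixes clock-style values with the literal INFINITY.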

    showbf -G 3x3x3

    Partition Tasks  Nodes Duration     StartOffset  StartDate      Geometry
    --------- ------ ----- ------------ ------------ -------------- --------
    ALL       864    54    23:56:03     00:00:00     22:52:37_10/29 3x3x3
    nid11293  864    54    23:56:03     00:00:00     22:52:37_10/29 3x3x3

Find xk nodes via showbf:

    showbf -f xk -p nid11293

    Partition Tasks  Nodes Duration     StartOffset  StartDate      Geometry
    --------- ------ ----- ------------ ------------ -------------- --------
    nid11293  9088   568   14:49:02     00:00:00     14:10:58_11/05 9x4x8
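The topology fields in the checkjob output shown under Querying Job States can be unpacked in a similar way. This sketch assumes that the Placement value has the form shape@start and that each bracketed Reserved Nodes entry is a node id followed by a task count; both readings are inferred from the example on this page, and the function names are hypothetical.

```python
import re
from typing import List, Tuple


def parse_placement(value: str) -> Tuple[Tuple[int, ...], Tuple[int, ...]]:
    """Split 'AxBxC@X.Y.Z' into (shape, start) integer tuples."""
    shape_text, start_text = value.split("@")
    shape = tuple(int(d) for d in shape_text.split("x"))
    start = tuple(int(c) for c in start_text.split("."))
    return shape, start


def parse_reserved_nodes(text: str) -> List[Tuple[int, int]]:
    """Extract (node_id, task_count) pairs from '[9402:1][9403:1]...' text."""
    return [(int(n), int(t)) for n, t in re.findall(r"\[(\d+):(\d+)\]", text)]


shape, start = parse_placement("4x1x5@16.3.2")
print(shape, start)  # (4, 1, 5) (16, 3, 2)

nodes = parse_reserved_nodes("[9402:1][9403:1][9400:1][9401:1]")
print(nodes)  # [(9402, 1), (9403, 1), (9400, 1), (9401, 1)]
```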